Algorithmic Thermodynamics

Posted by John Baez

Mike Stay and I would love comments on this paper:

- John Baez and Mike Stay, Algorithmic thermodynamics.

We got into writing this because Mike has worked a lot on algorithmic information theory. Gregory Chaitin has described this subject as “the result of putting Shannon’s information theory and Turing’s computability theory into a cocktail shaker and shaking vigorously.” In particular, this theory has revealed a deep link between ‘algorithmic entropy’ and the notion of entropy used in information theory and thermodynamics.

The algorithmic entropy of a string of bits is a measure of much information that string contains. It’s a bit subtler than the length of the shortest program that does the job . But it’s close: the difference between these is bounded by a constant. Anyway, the idea is that a random string like this:

has more algorithmic entropy than one with a simple pattern, like this:

On the other hand, in thermodynamics we define the Gibbs entropy of a probability measure on a countable set to be

If we think of as a set of possible outcomes of an experiment, the entropy says how much information we gain — on average — by learning the result of the experiment, if beforehand all we knew was that each outcome happened with probability .

If the set of outcomes is a set of bit strings, or some other set of ‘signals’, Gibbs entropy has another name: Shannon entropy. Gibbs was interested in chemistry and steam engines. Shannon was interested in communication, and measuring the amount of information in a signal. But the math is the same. This is a great story in itself! If you don’t know this story, try the links.

On the other hand, algorithmic entropy seems really different. Why? Both algorithmic entropy and Shannon entropy can be used to measure the amount of information in bit strings. But algorithmic entropy can measure the information in a single bit string, while Shannon entropy applies only to probability measures on bit strings.

Nonetheless, there are deep relationships between these two kinds of entropy. So, when I started talking about thermodynamics as part of a big set of analogies between different theories of physics in week289, Mike naturally wondered if algorithmic information theory should become part of this story. And that’s how this paper was born!

Here’s the idea:

Abstract: Algorithmic entropy can be seen as a special case of entropy as studied in statistical mechanics. This viewpoint allows us to apply many techniques developed for use in thermodynamics to the subject of algorithmic information theory. In particular, suppose we fix a universal prefix-free Turing machine and let be the set of programs that halt for this machine. Then we can regard as a set of ‘microstates’, and treat any function on as an ‘observable’. For any collection of observables, we can study the Gibbs ensemble that maximizes entropy subject to constraints on expected values of these observables. We illustrate this by taking the log runtime, length, and output of a program as observables analogous to the energy , volume and number of molecules in a container of gas. The conjugate variables of these observables allow us to define quantities which we call the ‘algorithmic temperature’ , ‘algorithmic pressure’ and ‘algorithmic potential’ , since they are analogous to the temperature, pressure and chemical potential. We derive an analogue of the fundamental thermodynamic relation

and use it to study thermodynamic cycles analogous to those for heat engines. We also investigate the values of and for which the partition function converges. At some points on the boundary of this domain of convergence, the partition function becomes uncomputable. Indeed, at these points the partition function itself has nontrivial algorithmic entropy.

As the first sentence hints, one of the fun things we noticed is that algorithmic entropy is a special case of Gibbs entropy — but only if we generalize a bit and use relative entropy. They say “everything is relative”. I don’t know if that’s true, but it’s sure true for entropy. Here’s the entropy of the probability measure relative to the probability measure :

This says how much information we gain when we learn that the outcomes of an experiment are distributed according to , if beforehand we had thought they were distributed according to .

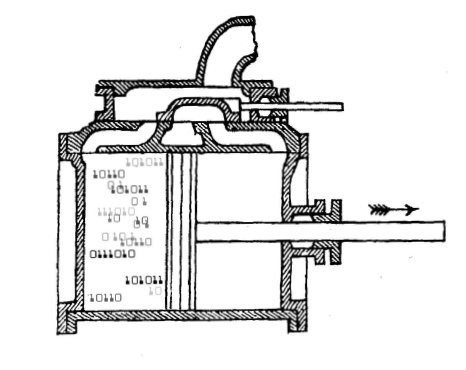

Another fun thing about this paper is how it turns around an old idea of Charles Babbage. His Analytical Engine was a theoretical design for computer powered by a steam engine. We describe a theoretical design for a heat engine powered by programs!

Re: Algorithmic Thermodynamics

Quoting from the paper:

“Charles Babbage described a computer powered by a steam engine; we describe a heat engine powered by programs! We admit that the significance of this line of thinking remains a bit mysterious. However, we hope it points the way toward a further synthesis of algorithmic information theory and thermodynamics. We call this hoped-for synthesis ‘algorithmic thermodynamics’.”

Though programs can be appreciated abstractly, I think people lose sight of the physical implementation of a program = unfolding of an stored electro-magnetic configuration on a hard drive. I think it was Bennett who said that a computable operation was no longer reversible after a release of heat had occurred.

This reminded of the other paper you guys collaborated on, something like: Does the HUP imply Godelian Incompleteness?