Bimonoids from Biproducts

Posted by John Baez

Today Jacob Biamonte and I were talking about Boolean circuits and we came across a cute fact I hadn’t noticed before. I’ll explain it, and then ask you to help me find a really slick proof.

But let’s start at the beginning. Suppose is a vector space. There’s a “diagonal map”

which goes like this:

There’s also an “codiagonal map”

which goes like this:

Yeah, it’s just addition.

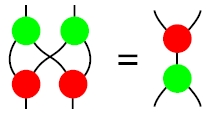

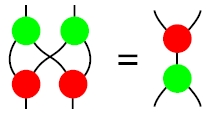

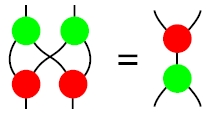

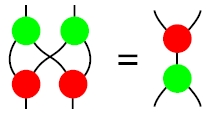

Now say we draw the diagonal map as a green blob with one input and two outputs, and the codiagonal map as red blob with two outputs and one input. Then this law holds:

Why?

Well, one reason is that it’s just true. Let’s look at it again:

On the left, we’re taking two vectors, duplicating each to get a total of four vectors, then swapping the middle two, then adding the first two and adding the last two:

On the right we’re taking two vectors, adding them, then duplicating the result:

So yeah, we’re getting the same answer: the law holds.

But that’s just the shallowest layer of understanding. We can get a bit deeper if we notice that the law we’re talking about is the compatibility condition for the multiplication and comultiplication in a bialgebra — or more generally, a bimonoid.

I suppose I should explain that a bit, though I seem to have said this a million times:

A monoid is an object with an associative multiplication and a unit. We can define a monoid in any monoidal category.

If is a monoidal category, so is the opposite category . A comonoid in is defined to be a monoid in . In other words: a comonoid is an object with a coassociative comultiplication and a counit.

A bimonoid is a gadget that’s both a monoid and a comonoid, where the monoid operations are comonoid homomorphisms — or equivalently, the comonoid operations are monoid homomorphisms! If you work out what this means, you’ll see that this law needs to hold:

where the green blob is the comultiplication, and the red blob is the multiplication. (Since we’re switching two wires, the concept of bimonoid only makes sense in a braided monoidal category.)

Now, can we use this abstract nonsense to understand what’s going on?

Yeah!

(You thought I was gonna say “No”? Then you were sorely wrong, my friend. That’s not how it works around here.)

Here are two famous facts:

- Any object in a category with finite products becomes a comonoid in a unique way.

- Any object in a category with finite coproducts becomes a monoid in a unique way.

These facts are dual to each other so we just need to understand one and we get the other for free. A category with finite products is just one with a terminal object where every pair of objects has a product. Given this, every object comes with a diagonal map and a map to the terminal object , and these make that object into a comonoid. With some more work, you can check that this is the only way to make it into a comonoid.

Dually, a category with finite coproducts is just one with an initial object where every pair of objects has a coproduct. Given this, every object comes with a codiagonal map and a map from the initial object , and these make that object into a monoid… and that’s the only way to do that.

But the category of vector spaces is particularly symmetrical and nice. The initial object is also the terminal object, so we call it a zero object. And the product of two objects is also their coproduct — and even better, a compatibility condition holds which makes us call the result a biproduct!

(The biproduct is what normal mortals often call the ‘direct sum’. Remember, we started out talking about vector spaces and direct sums. We still are.)

Let’s call a category with a zero object and where every pair of objects has a biproduct a category with finite biproducts. Then here’s the cute fact I hadn’t known:

- Any object in a category with finite biproducts becomes a bimonoid in a unique way.

It’s an easy calculation.

But here’s what I’m wondering now. First of all: people must already know this. James Dolan says he knew it. Does anyone know a good reference?

But secondly: is there an ultra-elegant way of deriving this cute fact from the two famous facts I listed before, using only abstract nonsense?

For example, I know that a bimonoid is a monoid in the category of comonoids, and vice versa. I also know that every category with finite biproducts is automatically enriched over the category of commutative monoids! Can you use ideas like this, together with the two famous facts listed above, to get the cute fact I learned today?

I should add that Jacob and I started out by thinking about vector spaces over the field with 2 elements. Then 0 is called “false”, 1 is called “true”, addition is called “exclusive or”, and this law:

is a fact about logic gates — or in other words, Boolean circuits. You can see this law drawn as a picture in figure 13 of Yves Lafont’s paper “Towards an algebraic theory of Boolean circuits”, which dates back to 2003. It was trying to understand this paper that led us down this train of thought.

Re: Bimonoids from Biproducts

As soon as I posted this and walked home through the sultry Singapore night, I realized why I’d gotten stuck in my attempts to find an ultra-elegant abstract nonsense proof of this fact:

I had been looking for one such proof — but in fact there are two, related by duality. And they’re both a lot more trivial than I was expecting.

Perhaps this clue will make the puzzle more fun without giving away my answer.