An Introduction to Linearly Distributive Categories

Posted by Emily Riehl

guest post by Brandon Baylor and Isaiah B. Hilsenrath

In the study of categories with two binary operations, one usually assumes a distributivity condition (e.g., how multiplication distributes over addition). However, today we’ll introduce a different type of structure, defined by Cockett and Seely, known as a linearly distributive category or LDC (originally called a weakly distributive category). See:

- J. R. B. Cockett and R. A. G. Seely. Weakly distributive categories. Journal of Pure and Applied Algebra, 114(2):133-173, 1997.

LDCs are categories with two tensor products linked by coherent linear (or weak) distributors. The significance of this theoretical development stems from many situations in logic, theoretical computer science, and category theory where tensor products play a key role. We have been particularly motivated to study LDCs from the setting of categorical quantum mechanics, where the application of LDCs supports a novel framework that allows the study of quantum processes of arbitrary dimensions (compared to well-known approaches that are limited to studying finite dimensions), so in addition to defining LDCs, we’ll use -autonomous categories to briefly touch upon the advantages LDCs offer in the context of quantum mechanics.

A Brief Introduction to Linear Logic

Before getting to the definition of LDCs, it is helpful to build intuition around the linear setting for which they are named. Note that this section is adapted from Priyaa Srinivasan’s 2021 thesis.

- P.V. Srinivasan. Dagger Linear Logic and Categorical Quantum Mechanics. PhD thesis, Department of Computer Science, University of Calgary, 2021.

Linear logic was introduced by Girard in 1987 as a logic for resource manipulation. Linear logic can be viewed as a refinement of other logic systems in that it joins the dualities of classical logic with the constructive properties of intuitionistic logic. Operationally, this means linear logic isn’t about expanding “truths” but manipulating resources that can’t be duplicated or thrown away at will.

Take, for example, the following statements, and :

The compound statement “” means that if a dollar is spent then an apple can be bought. One can have either a dollar or an apple at a given time but not both. Once the dollar is spent, is no longer true until another dollar obtained. Hence, the linear sensitivity of this logic is illustrated by a series of resource transformations.

The fragment of linear logic we are concerned with is known as multiplicative linear logic (MLL), which has connectives (, 1, , ). These connectives correspond to conjunction/disjunction as well as “and/or” from classical logic. The statement (read as “A times B” or “A tensor B”), for example, allows one the means to access both resources at the same time (e.g., if is an orange, then “” means both an apple and an orange are available to purchase at the same time). The formula 1 is the multiplicative truth of this fragment ().

(read as “par”), on the other hand, is the dual to and acts as the multiplicative disjunction. (One should beware the notation for par introduced by Cockett and Seely conflicts with Girard’s original notation; we will stick with Cockett and Seely’s version for this post.) In this case, is the dual to 1 (i.e., false). Linear logic also has an additive fragment and exponential operators (which allow linear logic to deal with non-linearity), but we won’t be mentioning them in detail here as our focus is on the binary operations of the multiplicative fragment.

A proof of a statement in linear logic may be regarded as a series of resource transformations. More generally, the proof theory used to define linear logic is derived from a Gentzen-style sequent calculus. A formal proof in this calculus is a sequence of so-called “sequents” that explicitly record dependencies line-by-line (i.e., each sequent is derivable from sequents appearing earlier in the sequence using logical or structural inference rules).

In the sequent calculus of linear logic, each line of a proof is written in the general form of . The sequent places a context (thought of as a collection of resources) on each side of the turnstile, thereby separating the assumptions on the left-hand side from the propositions on the right-hand side. In this way, linear logic is often considered as a two-sided logic. We offer a few simple examples to illustrate the nature of this logic system and highlight key points that led to the development of categorical semantics and LDCs based on this sequent calculus.

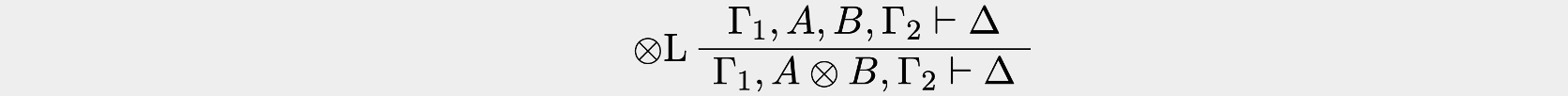

Left introduction rule for

Consider a string or sequence of propositions, where and are collections of resources.

The structural rules are for rewriting either the left-hand side or right-hand side of the sequent. For example, one can combine the terms on the left-hand side by tensoring them.

In the notation above, the top line can be thought of as the premise and the bottom line the conclusion. In this case, the left introduction rule is an inference rule that introduces a logical connective () in the conclusion of the sequent. By introducing tensor on the left-hand side of the turnstile, the conclusion means one needs both resources and to produce .

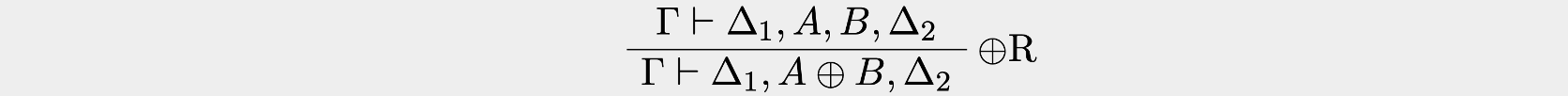

Right introduction rule for

The same introduction rules can be done with par, but for par, the collection of resources must be combined on the right-hand side of the turnstile (i.e., when left of the turnstile, the sequence is conjunctive; when right of the turnstile, the sequence is disjunctive).

There are also elimination rules, which are dual to the introduction rules, but we will not be elaborating on them here.

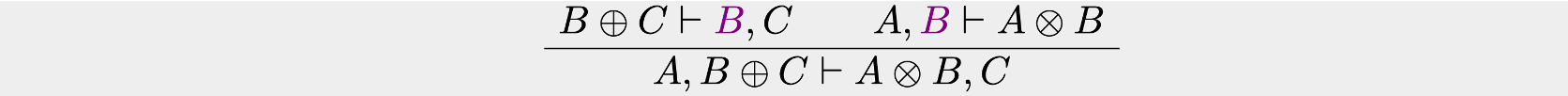

Cut rule

The cut rule allows formulae to be “cut out” and the respective derivation joined. In the example below, the formula is highlighted to show where the cut will take place.

With this inference rule, one can combine sequents based on their shared boundary, such as in the example above, where the formula is “cut out” and used to stick together the constituent premises to form a composite conclusion. Category theorists may recognize the cut rule, as it is just like composition. This begs the question if there is a categorical structure for this…

Linearly Distributive Categories

Definition of LDCs

To recap, we have covered the basics of the multiplicative fragment of linear logic (or MLL), we gave its resource-theoretic interpretation, and we briefly touched upon its Gentzen-style sequent calculus. But while sequent calculus is a versatile framework for MLL, as Priyaa Srinivasan writes in her 2021 thesis, “[p]roofs in sequent calculus often contain extraneous details due [to] the sequential nature of the calculus.” For instance, when applying rules to a sequent, an order of application needs to be specified, even if the rules are independent. This begs for an equivalent but notationally cleaner formulation of MLL, which is precisely what we will explore in this section. Specifically, we will explore linearly distributive categories (LDCs), which were first outlined in Cockett and Seely’s 1997 paper under the name weakly distributive categories. The incredible discovery of Cockett and Seely’s paper is that it recognizes that the fundamental property of linear logic is a kind-of “linearization” of the traditional distributivity of product over sum now called a linear distributor. However, we will not prove this fact here, directing the interested reader to the first section on polycategories and Theorem 2.1 in Cockett and Seely’s 1997 paper. Instead, we will take the fact that LDCs model MLL on faith, and only focus on exploring LDCs, so without further ado, we define LDCs.

A linearly distributive category (or a weakly distributive category) is a category with two monoidal structures where is a monoidal tensor called “times” with a monoidal unit called “top” or “true,” and is a monoidal tensor called “par” with a monoidal unit called “bottom” or “false.” Since the times is monoidal, it is equipped with the three familiar natural isomorphisms called the times associator, the left times unitor, and the right times unitor, respectively, which (of course) satisfy the monoidal triangle and pentagon equations. Likewise, as the par is also monoidal, we have another set of three natural isomorphisms called the par associator, the left par unitor, and the right par unitor, respectively.

However, there is nothing particularly interesting about two independent monoidal structures in the same category; what makes LDCs interesting is the interaction between these two monoidal structures, which consists of so-called linear distributors. Specifically, the times and par are linked by the two natural transformations which are called the left and right linear distributors, respectively, and these distributors are required to satisfy a couple dozen coherence conditions, as specified in section 2 of Cockett and Seely’s 1997 paper. While these maps have “distributor” in their name, that is pretty much the end of and ’s relation to distributive categories. Distributivity does not guarantee the existence of left and right distributors, and as Cockett and Seely found, the two tensors of a distributive category form an LDC if and only if the category is a preorder, making LDCs and distributive categories practically orthogonal constructs. The important thing to note is that, unlike for the traditional distributor, the object can only “attach” itself to one of the arguments of the sum . To give the resource-theoretic intuition behind this, one can imagine that the object represents a waiter, Alice, at a restaurant, and objects and represent two seated guests, Bob and Carol, at different tables. Alice can assign herself to serve either Bob (i.e., perform ) or Carol (i.e., perform ), but not both simultaneously. It is also important to note that these linear distributors do not in general represent an equality and are not invertible. So continuing our analogy, once Alice has assigned herself to either Bob or Carol, Alice cannot go back to a state where she can make that choice again, i.e., a state where she can switch the guest she is assigned to.

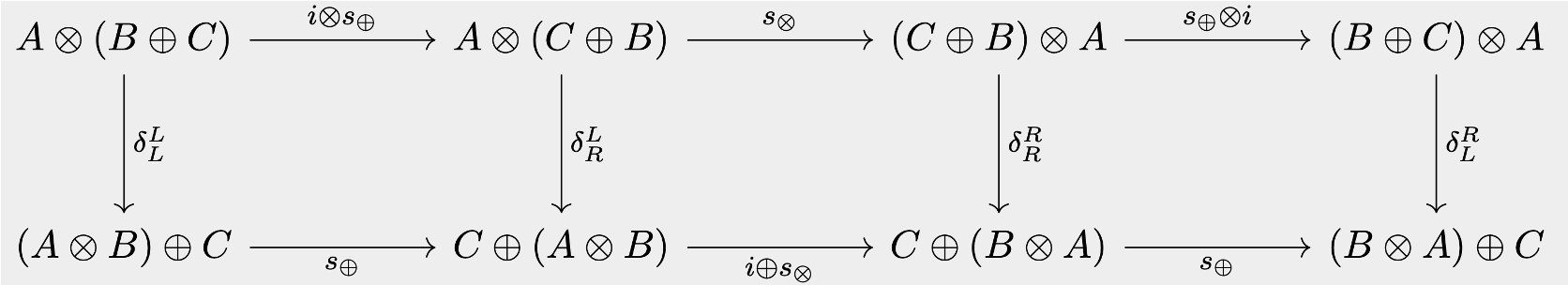

In addition to the aforementioned two linear distributors, the LDC can also have the two permuting linear distributors When our LDC has the and natural transformations, it is called a non-planar LDC. Note that if the times and par of the LDC are symmetric, we can create the permuting linear distributors and from the traditional linear distributors and with the times and par braidings (denoted by and , respectively), making the LDC automatically a non-planar LDC.

Graphical Calculus for LDCs

Note that this subsection and its figures are adapted from Cockett, Comfort, and Srinivasan’s 2021 paper on dagger linear logic.

- J.R.B. Cockett, C. Comfort, and P.V. Srinivasan, “Dagger linear logic for categorical quantum mechanics,” Logical Methods in Computer Science Volume 17, Issue 4, 10.46298/lmcs-17(4:8)2021, 2021.

Now that we described the basics of LDCs, you at this point may be asking what, then, makes LDCs so notationally special. Certainly, there is nothing particularly striking in the definition: an LDC is just two intertwined monoidal structures. But, it is precisely this structure that creates a rich graphical calculus (similar to monoidal graphical calculus), which provides us a versatile framework to perform entirely graphical manipulations on problems in the multiplicative fragment of linear logic. We direct the reader to Blute, Cockett, Seely, and Trimble’s 1996 paper Natural deduction and coherence for weakly distributive categories for a complete and rigorous discussion on the graphical calculus. Nevertheless, we will briefly discuss this graphical calculus here to give the reader some familiarity with the subject.

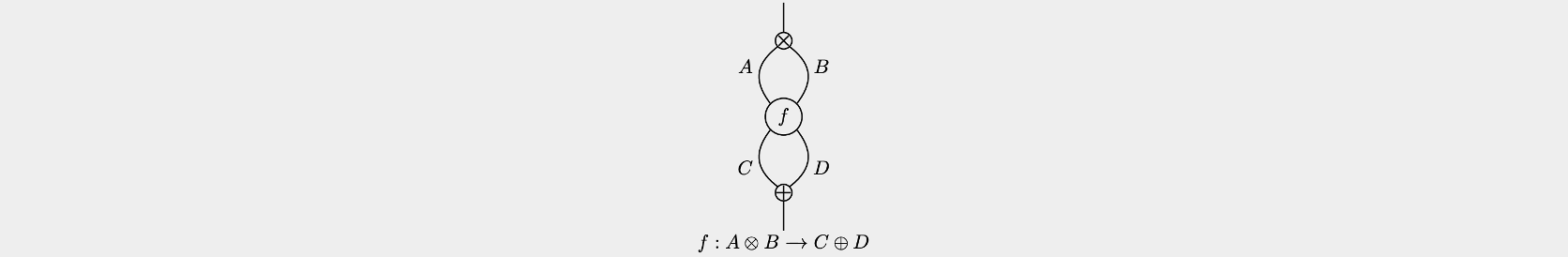

To start off, in the graphical calculus for LDCs, wires represent objects, circles represent morphisms, composition is done by stacking morphisms vertically, and we read diagrams from top to bottom. Recall that in general, sequents in linear logic (or morphisms in the LDC) go from tensored formulae (or objects in the LDC) to “par”ed formulae, so the input wires of a morphism are tensored (with ), and the output wires are “par”ed (with ). For instance, is denoted graphically by

The comonoid-like shape  at the top of the diagram represents -elimination, and what it denotes is the process of taking a tensor product like and splitting it up, with exiting through one wire and exiting through the other, allowing us to hypothetically operate on and independently. The monoid-like shape

at the top of the diagram represents -elimination, and what it denotes is the process of taking a tensor product like and splitting it up, with exiting through one wire and exiting through the other, allowing us to hypothetically operate on and independently. The monoid-like shape  at the bottom of the diagram, on the other hand, represents -introduction, and it denotes taking two objects, say and , and “par”ing them, resulting in the combined object exiting the process. In addition to these, we have an analogous -elimination, which is denoted graphically

at the bottom of the diagram, on the other hand, represents -introduction, and it denotes taking two objects, say and , and “par”ing them, resulting in the combined object exiting the process. In addition to these, we have an analogous -elimination, which is denoted graphically  , and -introduction, which is denoted graphically by

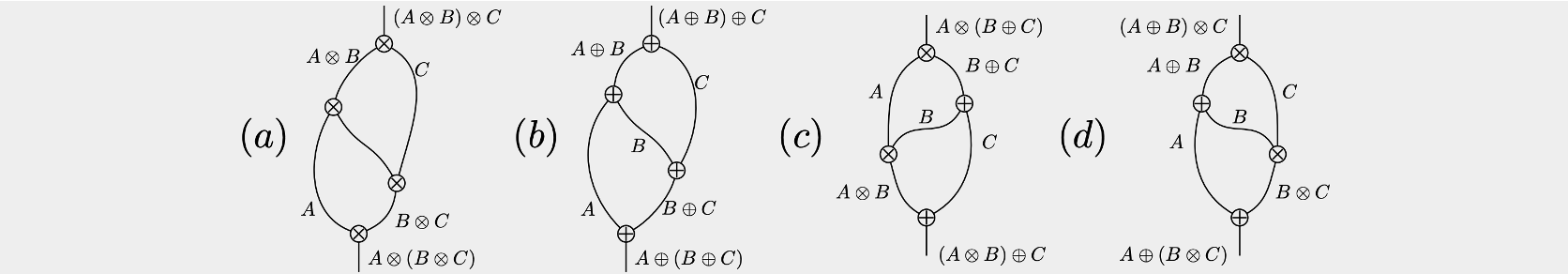

, and -introduction, which is denoted graphically by  . With -elimination, -introduction, -elimination, and -introduction, we denote graphically the -associator , the -associator , the left linear distributor , and the right linear distributor as

. With -elimination, -introduction, -elimination, and -introduction, we denote graphically the -associator , the -associator , the left linear distributor , and the right linear distributor as

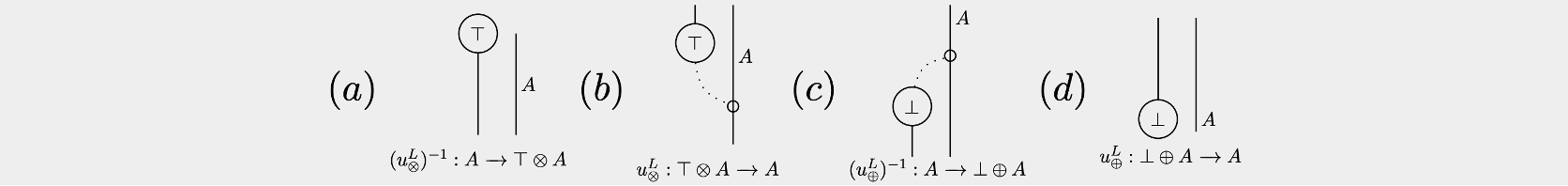

The final building blocks of this graphical calculus are those for the unitors, which are drawn as

The diagram in (a) is called the left -introduction, the diagram in (b) is called the left -elimination, the diagram in (c) is called the left -introduction, and the diagram in (d) is called the left -elimination. There is an analogous set of four diagrams for the right -unitor and the right -unitor which are just horizontal reflections of the four diagrams above.

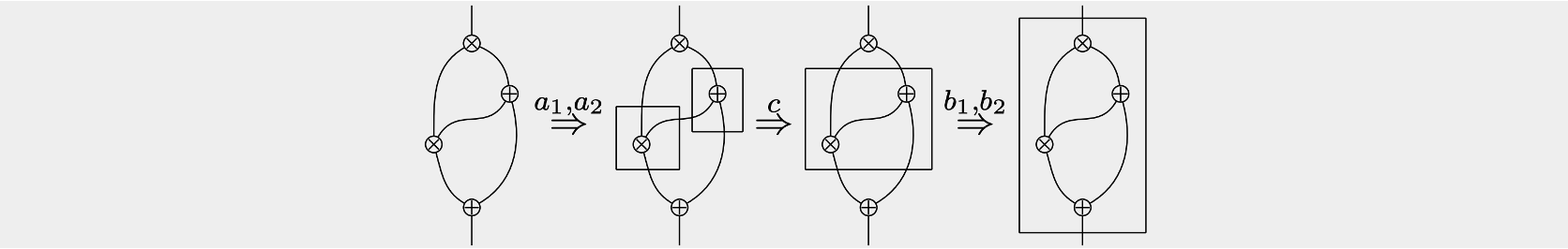

Once a morphism is expressed as a circuit using the building blocks above, there are numerous graphical manipulations one can perform, just as in monoidal graphical calculus. We refer the reader to Blute, Cockett, Seely, and Trimble’s 1996 paper to see these graphical manipulations. However, it is very important to note that not every circuit that can be constructed from these building blocks represents a valid morphism in our LDC. To check if a circuit is valid, one needs to show that the entire circuit can be enclosed in a single “box” by successfully using the rules - listed below:

For example, applying the boxing algorithm to the left linear distributor , we can see that the circuit can be encapsulated in one box, making it a valid circuit.

But the reverse circuit cannot be “boxed” because a -elimination cannot be absorbed by a box above it and -introduction cannot be absorbed by a box below it.

This shows us why the inverse of the left linear distributor is not in general possible, but it is important to note that this does not mean that there does not exist any LDC with an inverse to . In the -shifted tensor example below, , which is invertible by definition.

LDC Example: The -Shifted Tensor

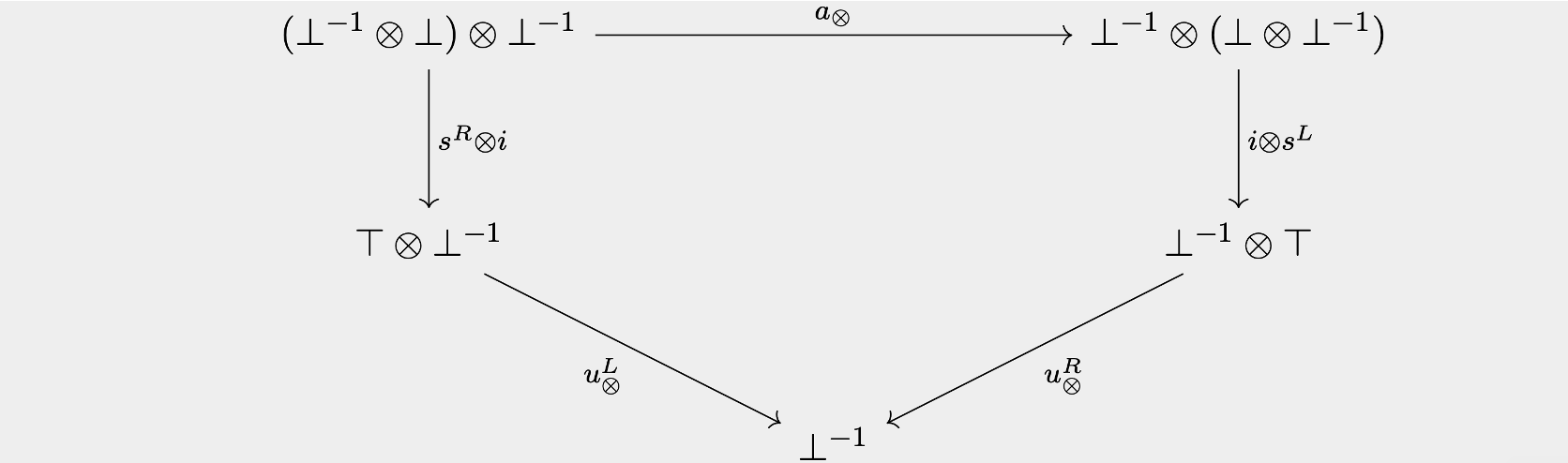

Now that we have defined the LDC, let us explore a simple example of one. In this section, we explore the -shifted tensor. To start off, we assume we are given a category with a monoidal structure with a tensor inverse, which is an object such that there exists an object and two isomorphisms such that the diagram below commutes.

Note that at this point, we do not have the ingredients to declare that is an LDC. Namely, we are missing a second monoidal structure (i.e., the par ) and linear distributors (i.e., and ), so in order to show that is an LDC, we need to construct the par and the linear distributors, which we are able to do with the tensor inverse along with its corresponding isomorphisms and .

We choose to define our par tensor by and we will take to be the unit for the tensor. This tensor we call the -shifted tensor. We then define the associator , left unitor , and right unitor for par using the natural isomorphisms from the monoidal structure and the tensor inverse’s isomorphisms and , as shown below. (Note that the equalities below are of the natural transformations, not the objects.) Using the monoidal triangle and pentagon equations for and the coherence diagram for the tensor inverse’s isomorphisms and , we can show that our constructed structure satisfies the monoidal triangle and pentagon equations, giving us a second monoidal structure. But are there linear distributors between these two monoidal structures? In fact, there do exist linear distributors for this category, and looking at the necessary domains and codomains, it is not too hard to see that they are just associators. We can define a left and a right linear distributor by These maps satisfy all the LDC coherence conditions, making any monoidal category with a tensor inverse an LDC!

-Autonomous Categories

The Negative Object Map

Now that we have covered a categorical framework for the multiplicative fragment of linear logic via LDCs, we are interested in adding into this framework a notion of negation, and this is precisely what we will explore in this section. In this brief section, we will study -autonomous categories, which provide a categorical presentation of MLL with negation. But before we treat that, we will begin by just adding two negation functions on the objects of an LDC, so without further ado:

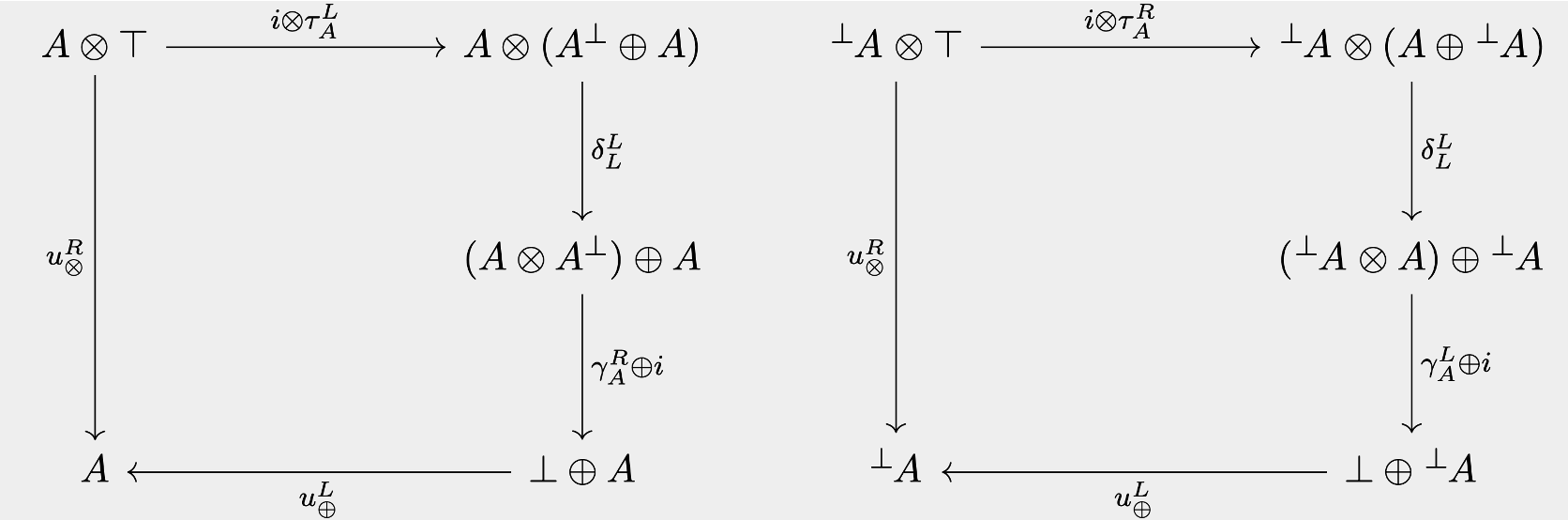

A linearly distributive category with negation is a regular LDC with two functions on objects of , which we denote by and , where we have the parameterized families of morphisms These morphisms must satisfy the coherence conditions where, for instance, the commutative diagrams for the first (left) and third (right) coherence conditions are

While reading this definition, you may have noticed something rather odd: nowhere in the definition of an LDC with negation do we require that and be functors. In fact, we do not even request that and act on morphisms at all; we only require that these maps act on objects. The reason for this is that an LDC with negation has sufficient structure to make and into functors without requiring this a priori. Specifically, if for any morphism in , we define by then extends to a contravariant functor. A similar definition makes into a contravariant functor.

Now that we have an LDC with negation, let us better understand how this structure implements a notion of negation. The key lies in the four parameterized families of morphisms , , , and . To give intuition as to why this is the case, let us treat & as “not,” as “true,” as “false,” as “and,” and as “or.” Then with this interpretation, reads as “not and implies false,” reads as “ and not implies false,” reads as “true implies not or ,” and finally, reads as “true implies or not ,” implying that the morphisms & encode in our LDC a notion of “contradiction,” and the morphisms & encode “tertium non datur,” giving our LDC a notion of negation.

To continue our discussion of how our “LDC with negation” models logical negation, we need one formal property of LDCs with negation, which is that they have the following adjunctions. The proof of this is not too hard, but we will only provide a sketch of the proof that is left adjoint to for brevity. To do this, we need to find a unit and counit for the adjunction. In this case, the unit is given by and the counit is given by for all . Now that we have proposed a unit and counit for the adjunction, we need to show that they satisfy the triangle identity, but doing so necessitates a quite lengthy diagram chase, so we refer the reader to Lemma 4.2 in Cockett and Seely’s 1997 paper to see the commutative diagram that proves this.

The reason we mentioned this property of LDCs with negation is because these adjunctions give us the following bijections.

Continuing our intuition from before, we can interpret the top-left bijection as telling us that “ and implies ” is equivalent (or more precisely, isomorphic) to “ implies not or ,” which is exactly how we would expect negation to work in logic. Similarly, the top-right bijection tells us that “not and implies ” is equivalent to “ implies or ,” which is again exactly what we would expect from negation in logic. And so on.

Definition and Graphical Calculus for -Autonomous Categories

Now that we have encoded negation into our LDC, we will define an interesting type of LDC with negation: a case where we only need one negation map . This type of LDC is called a symmetric linearly distributive category with negation, which is a regular LDC where the and monoidal structures have symmetric braidings, which we denote by and , respectively, such that the diagram below commutes.

In addition to the symmetric braidings and , the symmetric LDC with negation has a function defined on the objects of along with the following morphisms for every object : These induce the following families which together satisfy the following main coherence conditions (as well as related coherence conditions that arise from fundamental symmetries of non-planar LDCs). It turns out (as is shown in Cockett and Seely’s 1997 paper) that a symmetric linearly distributive category with negation is completely equivalent to a -autonomous category, giving us another axiomatization of -autonomous category that is, in many instances, easier to prove. In the next section, we will give an example of a category that is -autonomous, which we will prove by showing that it is a symmetric LDC with negation.

But before we continue on to that example, we will take a brief digression to look at the coherence conditions of the symmetric LDC with negation. On the face of it, these two coherence conditions look complicated and uninteresting. However, when we draw the equations in our graphical calculus for LDCs (suppressing units), we get a remarkable structure, which is shown below.

To the reader who is familiar with monoidal graphical calculus, you will instantly recognize these as the “snake” or “yanking” equations for compact closed monoidal categories, which allow curved lines in a diagram to be straightened. Hence, a symmetric LDC with negation (or a -autonomous category) has a notion of yanking curved lines straight in the graphical calculus for LDCs. However, a -autonomous category is not a compact closed monoidal category, and and are not the same as the unit and counit of the dual for a compact closed monoidal category, as maps to the monoidal unit of par and maps from the monoidal unit of times , and these two monoidal units and are not in general equal. This makes -autonomous categories very interesting for studying infinite-dimensional quantum mechanics. To elaborate on the reason: the snake equations have been extensively used in finite-dimensional quantum mechanics, which can be modeled by a compact closed monoidal category FdHilb. Unfortunately, once you move from finite dimensions to infinite dimensions, the monoidal category Hilb is no longer compact closed. However, with a -autonomous category, it becomes possible to create a notion of “yanking” equations straight without the category being compact closed, which is a promising feature for modeling infinite-dimensional quantum mechanics. This is still a very active area of research, and we direct the interested reader to Priyaa Srinivasan’s thesis for a comprehensive treatment on the topic.

-Autonomous Categories Example: The Abelian Group

In the prior section, we introduced -autonomous categories (well actually, we introduced the equivalent notion of symmetric LDCs with negation), so in this section we are going to explore an example of one: an abelian group. Let us consider an abelian group , where we choose at random one of the elements in , which we denote by . We can interpret this abelian group as a symmetric monoidal category, where we take the objects of to be the objects of the category, we take to be the monoidal tensor, we take to be the monoidal unit, and we say there is a morphism to for if and only if (which implies that all diagrams commute automatically by transitivity!). So, for example, because of this interpretation and the fact that is an abelian group, we know our induced category has an associator, as for all ; a left unitor, as for all ; a right unitor, as for all ; and a symmetric braiding, as for all . The fact that the pentagon and triangle equations are satisfied is immediate via transitivity as we mentioned above, thereby justifying our earlier statement that our abelian group is really just a symmetric monoidal category. In fact, it is a monoidal category with a tensor inverse , but let us ignore that fact for the remaining discussion, as we want to work out this example from scratch.

We will take the monoidal structure to be the par monoidal structure for the LDC. But we still need to find a second monoidal structure for the times. Let us define by , where is the inverse of , and let us take to be its unit. Clearly, for all , so our binary operator , with as its unit, has a left unitor, a right unitor, an associator, and a symmetric braiding, giving us our second symmetric monoidal structure, which we will take to be the times monoidal structure for the LDC.

Now, we need linear distributors. Since, for all , our category has a left linear distributor and a right linear distributor. The coherences are immediately satisfied via transitivity, so our abelian group with a designated invertible element is an LDC. Since our par and our times have symmetric braidings, to show that our category is -autonomous, all we need to show is that we have a map on objects such that there exists a “contradiction” map and a “tertium non datur” map for all . Let us define , where is the inverse of , for all . Then, for all , which implies that and exist. Thus, the abelian group is a -autonomous category.