This Week’s Finds in Mathematical Physics (Week 243)

Posted by John Baez

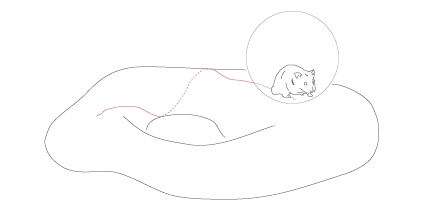

In week243 of This Week’s Finds, hear about Claude Shannon, his sidekick Kelly, and how they used information theory to make money at casinos and on the stock market. Hear about the new book Fearless Symmetry, which explains fancy number theory to ordinary mortals. Learn about the Dark Ages of our Universe and how they were ended by the earliest stars. And finally, get a taste of Derek Wise’s work on Cartan geometry, gravity… and hamsters!
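The summary above only hints at the betting idea. Here is a minimal Python sketch, assuming the scheme meant is what is now called the Kelly criterion (the post does not spell it out here). For a bet won with probability p that pays b-to-1, stake the fraction f* = p - (1 - p)/b of your bankroll. For an even-money bet (b = 1), the resulting growth rate in bits per bet equals 1 - H(p), the capacity of a binary symmetric channel, which is where information theory comes in. The function names and the numbers are illustrative assumptions, not taken from the post.

```python
import math


def kelly_fraction(p: float, b: float) -> float:
    """Fraction of bankroll to stake on a bet won with probability p paying b-to-1.

    Kelly's rule: f* = p - (1 - p) / b, clipped at 0 (no bet without an edge).
    """
    return max(0.0, p - (1.0 - p) / b)


def growth_rate_bits(p: float, b: float, f: float) -> float:
    """Expected log2 growth of the bankroll per bet when staking fraction f."""
    q = 1.0 - p
    rate = p * math.log2(1.0 + b * f)
    if q > 0.0:  # skip the losing branch when it has zero probability
        rate += q * math.log2(1.0 - f)
    return rate


def binary_entropy(p: float) -> float:
    """Shannon entropy, in bits, of a coin that lands heads with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


if __name__ == "__main__":
    p, b = 0.6, 1.0  # hypothetical even-money bet won 60% of the time
    f = kelly_fraction(p, b)
    print(f"Kelly fraction: {f:.2f}")
    print(f"growth rate:    {growth_rate_bits(p, b, f):.4f} bits per bet")
    print(f"1 - H(p):       {1 - binary_entropy(p):.4f} bits")
```

Staking more or less than f* lowers the long-run growth rate; overbetting badly makes it negative even when every individual bet is favorable.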

Posted at December 26, 2006 1:55 AM UTC

Re: This Week’s Finds in Mathematical Physics (Week 243)

“...interesting reading...” Well, hmm...

Merry Christmas!!