The Kantorovich Monad

Posted by Simon Willerton

guest post by Paolo Perrone

On this blog there has been quite a number of posts about the interaction of category theory and probability (for example here, here, here, and here), as well as about applying category theory to the study of convex structures (for example here, here, and here).

One of the central constructions in categorical probability theory is that of a probability monad. A probability monad can be thought of as a way to extend spaces so that they include, besides their elements, also their random elements.

Here I would like to talk about a probability monad on the category of metric spaces: the Kantorovich monad. As some of you may suspect, this is related to Kantorovich duality, which appeared on this blog in the context of enriched profunctors. The Kantorovich monad was introduced by Franck van Breugel for compact metric spaces in his note The Metric Monad for Probabilistic Nondeterminism (pdf). In the work that resulted in my PhD thesis (pdf), Tobias Fritz and I extended the construction, and discovered some very particular properties of this monad. In particular, this monad can be described purely in terms of combinatorics of finite sequences of elements! Most of the work explained in this post can be found in the paper by me and Tobias:

- A probability monad as the colimit of spaces of finite samples, Theory and Applications of Categories vol. 34, 2019.

A quick introduction to monads

If you are already familiar with monads, you may skip this section. For everybody else: monads are among the most important constructions in category theory. A monad on a category $C$ consists of the following data (subject to axioms you can find at the above link):

- an endofunctor $T : C \to C$ on our category;

- a natural transformation $\mu : TT \Rightarrow T$, called the multiplication of the monad;

- a natural transformation $\eta : \mathrm{id}_C \Rightarrow T$ from the identity on $C$ to $T$, called the unit of the monad.

A way to interpret and motivate the construction is the idea that a monad is like a consistent way of extending spaces to include generalized elements of a specific kind.

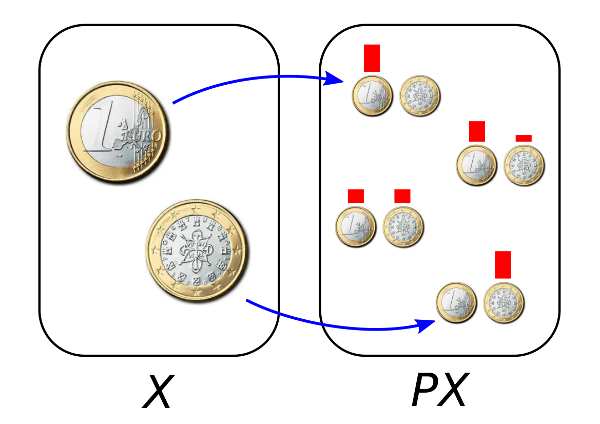

- The endofunctor $T$ takes a space $X$ and gives a new space $TX$ which can be thought of as an extension of $X$.

- The unit natural transformation gives embeddings $\eta_X : X \to TX$ from the old spaces $X$ into their extensions $TX$. In other words, the new extended spaces have to contain the old ones.

- The multiplication natural transformation takes a twice-extended space $TTX$ and maps it back to a once-extended space $TX$, via $\mu_X : TTX \to TX$.

As an example, take the power set monad on the category of sets.

- The endofunctor $\mathcal{P}$ takes a set $X$ and gives the set $\mathcal{P}X$ of its subsets. Subsets can be considered a generalization of elements.

- The unit gives maps $\eta_X : X \to \mathcal{P}X$ which assign to $x \in X$ the singleton $\{x\}$. In this sense a subset is more general than an element: the elements correspond precisely to the subsets which are singletons.

- The multiplication $\mu_X : \mathcal{P}\mathcal{P}X \to \mathcal{P}X$ maps subsets of subsets of $X$ to their union, which is a subset of $X$. For example,

$$\big\{\{x, y\}, \{y, z\}\big\} \;\mapsto\; \{x, y, z\}.$$
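The three pieces can be made concrete with a minimal Python sketch of the power set monad. The sketch assumes finite sets for the sake of illustration, although the monad itself works for arbitrary sets. Subsets are modelled as frozensets so that they can themselves be elements of sets. The names `unit`, `multiplication` and `fmap` are our own.

```python
from itertools import chain


def unit(x):
    """Unit eta_X: send an element x to the singleton {x}."""
    return frozenset({x})


def multiplication(family):
    """Multiplication mu_X: send a set of subsets to their union."""
    return frozenset(chain.from_iterable(family))


def fmap(f, subset):
    """Action of the functor on a function f: take the direct image."""
    return frozenset(f(x) for x in subset)


# The example from the text: {{x, y}, {y, z}} |-> {x, y, z}.
nested = frozenset({frozenset({"x", "y"}), frozenset({"y", "z"})})
print(sorted(multiplication(nested)))  # ['x', 'y', 'z']
```

The two unit laws of the monad can be spot-checked on any finite set `S`. Both `multiplication(unit(S))` and `multiplication(fmap(unit, S))` give back `S`.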

Another motivation for the theory of monads is that a monad is like a consistent choice of spaces of formal expressions of a specific kind. In this interpretation, the endofunctor $T$ takes a space $X$ and gives a new space $TX$ which can be thought of as containing formal expressions of a specific kind. One example is that of formal sums. If $X$ contains elements

$$x, \; y, \; z, \; \dots$$

then $TX$ contains finite strings such as

$$x + y, \qquad x + y + z, \qquad x + x, \qquad \dots$$

The unit gives maps $\eta_X : X \to TX$ which assign to each element of $X$ the trivial formal expression containing only that one element. For example, in the formal sum case, $x$ is mapped to the trivial formal sum

$$x$$

in which nothing else is added to $x$.

The multiplication gives maps $\mu_X : TTX \to TX$ from formal sums of formal sums to formal sums, which reduce the expression by removing the nesting. For example,

$$(x + y) + (x + z) \;\mapsto\; x + y + x + z.$$

So far, all the operations are purely formal. There are spaces where these expressions can actually be evaluated. For example, in the natural numbers the sum

$$2 + 3$$

has the result $5$. The spaces where these formal expressions have a result are called the algebras of the monad. More precisely, an algebra of the monad consists of an object $A$ together with a map $e : TA \to A$ which intuitively assigns to an expression its result. Again, the map $e$ is required to satisfy some axioms (which say, for example, that the trivial formal expression $a$ has result $a$).
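The formal-sum example can be explored in the same way. The sketch below rests on our own modelling choices and is only an illustration:

- a formal sum is a Python list of symbols, that is, a finite string;
- the unit wraps an element in a one-element list;
- the multiplication concatenates the inner lists;
- the integers form an algebra via ordinary addition.

```python
from itertools import chain


def unit(x):
    """Trivial formal sum: the one-element string containing x."""
    return [x]


def multiplication(nested):
    """Formal sum of formal sums -> formal sum, by removing one level of nesting."""
    return list(chain.from_iterable(nested))


def evaluate(formal_sum):
    """Algebra structure on the integers: compute the actual result of the sum."""
    return sum(formal_sum)


expression = [[2, 3], [4]]                   # the nested formal sum (2 + 3) + (4)
print(multiplication(expression))            # [2, 3, 4]
print(evaluate(multiplication(expression)))  # 9
```

The algebra axioms say two things. First, `evaluate(unit(a)) == a`. Second, flattening first and then evaluating gives the same result as evaluating each inner sum and then adding up the results.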

For a more detailed but still nontechnical introduction to monads you can take a look at Chapter 1 of my thesis (pdf).

Probability monads

The idea of a probability monad is that we want to assign to a space $X$ a new space $PX$ containing random elements of $X$. This is consistent with the two interpretations given above:

- random elements can be thought of as a generalization of ordinary elements;

- random elements can be thought of as formal convex combinations of ordinary elements.

Probability monads were introduced by Michèle Giry in her work A Categorical approach to probability theory (pdf). A possible way of interpreting her work is the following. First of all, we consider a “base” category $C$, whose objects we think of as spaces of possible outcomes, and whose morphisms we think of as functions between such spaces. For example, the outcomes of a coin flip form a set of two elements, $\{\text{Heads}, \text{Tails}\}$. Spaces of outcomes may have additional structure, usually at least a sigma-algebra, or even a topology or metric, and the morphisms of $C$ are compatible with that extra structure.

The category $C$ is equipped with a monad $(P, \delta, E)$, called a probability monad, consisting of an endofunctor $P : C \to C$ and natural transformations $\delta : \mathrm{id}_C \Rightarrow P$ and $E : PP \Rightarrow P$. Let’s see in more detail what these encode.

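To each space of outcomes $X$, the functor assigns a space $PX$ of random outcomes. Usually, these are the probability measures on $X$, or sometimes valuations. For example, $P\{\text{Heads}, \text{Tails}\}$ contains all formal convex combinations, that is, formal real linear combinations with the coefficients lying between $0$ and $1$ and adding up to $1$. Here are some examples:

- $\tfrac12\,\text{Heads} + \tfrac12\,\text{Tails}$,

- $\tfrac14\,\text{Heads} + \tfrac34\,\text{Tails}$,

- $1\,\text{Heads} + 0\,\text{Tails}$,

- $0\,\text{Heads} + 1\,\text{Tails}$.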

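For each space $X$, the unit $\delta : X \to PX$ gives a natural map from $X$ into the space of random elements. It is usually an embedding, and it maps each (deterministic) outcome $x \in X$ to the random outcome $\delta_x$ assigning probability $1$ to $x$ and $0$ to everything else. In other words, the outcomes in the image of $\delta$ are those that are not really random. For the example of the coin, we have

- $\delta(\text{Heads}) = 1\,\text{Heads} + 0\,\text{Tails}$ and $\delta(\text{Tails}) = 0\,\text{Heads} + 1\,\text{Tails}$.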

For each space $X$, the multiplication gives a natural map $E : PPX \to PX$. The space $PPX$ can be thought of as containing laws of random variables whose law is also random, or “random random variables”.

Here is an example. Suppose that I have two different coins in my pocket, one with the usual Heads and Tails sides, and one that has two Heads. These coins have different laws: one has a $1/2$ chance of Heads and a $1/2$ chance of Tails, and the other one yields Heads with probability $1$.

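Suppose I now keep both coins in my pocket, draw one at random, and then flip it. There is probability $1/2$ of extracting the first coin, itself with law $\tfrac12\,\text{Heads} + \tfrac12\,\text{Tails}$, and probability $1/2$ of extracting the second coin, itself with law $1\,\text{Heads} + 0\,\text{Tails}$. We can model the situation as

$$\tfrac12\big(\tfrac12\,\text{Heads} + \tfrac12\,\text{Tails}\big) + \tfrac12\big(1\,\text{Heads} + 0\,\text{Tails}\big) \in PP\{\text{Heads}, \text{Tails}\}.$$

The space $PP\{\text{Heads}, \text{Tails}\}$ contains elements of the form “formal convex combinations of formal convex combinations” (nested, hence the brackets). Now, as you have probably already guessed, the overall probability of obtaining Heads in this situation is $\tfrac12 \cdot \tfrac12 + \tfrac12 \cdot 1 = \tfrac34$, and that of Tails is $\tfrac12 \cdot \tfrac12 + \tfrac12 \cdot 0 = \tfrac14$. This is an ordinary random outcome, an element of $P\{\text{Heads}, \text{Tails}\}$. We have obtained it by averaging:

$$\tfrac12\big(\tfrac12\,\text{Heads} + \tfrac12\,\text{Tails}\big) + \tfrac12\big(1\,\text{Heads} + 0\,\text{Tails}\big) \;\mapsto\; \tfrac34\,\text{Heads} + \tfrac14\,\text{Tails}.$$

This averaging process is the map $E : PPX \to PX$, the multiplication of the monad.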
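For finitely many outcomes, the averaging map can be sketched in a few lines of Python. The encoding is our own, chosen for illustration:

- a random outcome is a dictionary from outcomes to exact `Fraction` probabilities;
- a finitely supported element of $PPX$ is a list of (weight, measure) pairs, because dictionaries cannot themselves be dictionary keys.

```python
from collections import defaultdict
from fractions import Fraction


def average(mixture):
    """Multiplication E: flatten a finite mixture of measures,
    given as (weight, measure) pairs, into a single measure."""
    result = defaultdict(Fraction)
    for weight, measure in mixture:
        for outcome, p in measure.items():
            result[outcome] += weight * p
    return dict(result)


half = Fraction(1, 2)
fair_coin = {"Heads": half, "Tails": half}
two_headed = {"Heads": Fraction(1), "Tails": Fraction(0)}
pocket = [(half, fair_coin), (half, two_headed)]  # draw each coin with probability 1/2
print(average(pocket))  # {'Heads': Fraction(3, 4), 'Tails': Fraction(1, 4)}
```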

How this is done in practice may vary depending on the choice of base category and of monad. In general, the convex combinations may be infinite, that is, expressed by an integral rather than just a sum. This is where most of the analytical difficulties come from.

In the example above, we have never actually averaged between Heads and Tails: the space $\{\text{Heads}, \text{Tails}\}$ is not closed under taking averages, in any sense. However, there are many situations in which the average is not just a formal expression but has an actual result. For example, for real numbers, $\tfrac12 \cdot 2 + \tfrac12 \cdot 4 = 3$. An algebra of the probability monad is precisely a space $A$ which is equipped with a way of evaluating those formal convex combinations. That amounts to a map $e : PA \to A$ satisfying the usual laws of algebras of a monad. In other words, algebras of a probability monad tend to look like generalized convex spaces. The morphisms of algebras are then maps which commute with the convex structure, which we can think of as generalized affine maps. Taking averages, or expected values, is one of the most important operations in probability theory. The spaces where this can be done are exactly the algebras of a probability monad.
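As a small sanity check of the algebra laws, here is a Python sketch for the real numbers. We restrict, as an assumption, to finitely supported measures with rational weights. The function `expectation` plays the role of $e : PA \to A$, and the two algebra axioms become the two assertions at the end. All names are our own.

```python
from collections import defaultdict
from fractions import Fraction


def dirac(a):
    """Unit delta: the point measure at a."""
    return {a: Fraction(1)}


def expectation(measure):
    """Algebra map e: evaluate a finite convex combination of numbers."""
    return sum(p * a for a, p in measure.items())


def flatten(mixture):
    """Multiplication E on a finite mixture given as (weight, measure) pairs."""
    result = defaultdict(Fraction)
    for weight, measure in mixture:
        for a, p in measure.items():
            result[a] += weight * p
    return dict(result)


def push_expectation(mixture):
    """P(e): push the mixture forward along e, replacing each inner measure by its mean."""
    result = defaultdict(Fraction)
    for weight, measure in mixture:
        result[expectation(measure)] += weight
    return dict(result)


half = Fraction(1, 2)
mixture = [(Fraction(1, 3), {0: half, 6: half}), (Fraction(2, 3), {3: Fraction(1)})]
# Unit law: e(delta_a) = a.
assert expectation(dirac(5)) == 5
# Multiplication law: e(E(m)) = e(P(e)(m)).
assert expectation(flatten(mixture)) == expectation(push_expectation(mixture))
```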

Again, more motivation for probability monads can be found in Chapter 1 of my thesis (pdf), in case you are interested!

The Kantorovich functor

Following the guidelines above, we now pick as base category the category of complete metric spaces, which we denote as $\mathsf{CMet}$:

- objects are complete metric spaces;

- morphisms are short maps, or 1-Lipschitz maps, which are the maps $f : X \to Y$ such that for all $x, x' \in X$,

$$d\big(f(x), f(x')\big) \le d(x, x').$$

You may know this category since, following Lawvere, it can be thought of as a category of enriched categories and functors (see for example this post, or the original paper).

Given a complete metric space $X$, we define $PX$ to be the space of Radon probability measures on $X$ with finite first moment. The latter condition means that the average distance is finite: $p \in PX$ has finite first moment if and only if

$$\int_{X \times X} d(x, y)\, dp(x)\, dp(y)$$

is a finite number. Equivalently, all short maps $f : X \to \mathbb{R}$ are $p$-integrable.

The metric that we assign to $PX$ is the celebrated Kantorovich metric, also known as the Kantorovich-Rubinstein metric, the 1-Wasserstein metric, or the earth mover’s distance. The basic idea behind this metric is that it is a sort of convex extension of the metric of $X$. If we view the space of probability measures $PX$ as an extension of $X$ in which $X$ sits embedded via the unit map $\delta : X \to PX$, it is natural to require that the metric on $PX$ makes $\delta$ an isometric embedding, i.e. for all $x, y \in X$,

$$d_{PX}(\delta_x, \delta_y) = d(x, y).$$

This requirement makes Wasserstein metrics different from other metrics for probability measures (such as the total variation distance), in that point measures over neighboring points are themselves neighboring, even if they have no actual overlap. So Wasserstein metrics keep nontrivial track of the distance and topology of the underlying space. The condition above is of course not enough to determine the metric uniquely; we need to see how the metric works when the measures are nontrivial. Consider three points $x, y, z \in X$, and the probability measures $\delta_x$ and $\tfrac12\delta_y + \tfrac12\delta_z$. We would like their distance to lie between $d(x, y)$ and $d(x, z)$. A possible choice is

$$d_{PX}\big(\delta_x,\ \tfrac12\delta_y + \tfrac12\delta_z\big) = \tfrac12\, d(x, y) + \tfrac12\, d(x, z).$$

This can be interpreted in the following way: half the mass of $\delta_x$ has to be moved from $x$ to $y$, and the other half from $x$ to $z$. Therefore the total cost of transport is

$$\tfrac12\, d(x, y) + \tfrac12\, d(x, z).$$

For this reason, this distance is sometimes called the earth mover’s distance. Another interpretation, in line with the idea of formal convex combinations, would be that the distance between formal convex combinations is the convex combination of the distances. If the first measure is also nontrivial, for example $\tfrac12\delta_x + \tfrac12\delta_w$ for a fourth point $w \in X$, there are at least two possible ways of moving the mass between $\tfrac12\delta_x + \tfrac12\delta_w$ and $\tfrac12\delta_y + \tfrac12\delta_z$. We can move the mass at $x$ to $y$ and the mass at $w$ to $z$. We can move the mass at $x$ to $z$ and the mass at $w$ to $y$. Or we can use a combination of the two. In this case, the distance will be the optimal choice among these possibilities, that is

$$d_{PX}\big(\tfrac12\delta_x + \tfrac12\delta_w,\ \tfrac12\delta_y + \tfrac12\delta_z\big) = \min\Big\{\tfrac12\, d(x, y) + \tfrac12\, d(w, z),\ \ \tfrac12\, d(x, z) + \tfrac12\, d(w, y)\Big\}.$$

Since we are optimizing an affine functional and all the possibilities form a convex set, it is sufficient to optimize over the extreme points, which are permutations (in this case, of $y$ and $z$). This procedure, after taking a suitable limit, specifies the metric uniquely. Below, I’ll give a sketch of how this is done in practice.
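For two uniform measures on the same number $n$ of points, the remark about extreme points means we may simply minimize over permutations. The justification is Birkhoff's theorem on doubly stochastic matrices. The brute-force Python sketch below is exponential in $n$, so it is only meant for tiny examples. For larger inputs one would use an assignment solver such as `scipy.optimize.linear_sum_assignment`. The function name and the restriction to equal-size uniform samples are our own assumptions.

```python
from itertools import permutations


def kantorovich_uniform(xs, ys, d):
    """Kantorovich distance between (1/n) sum_i delta_{xs[i]} and (1/n) sum_i delta_{ys[i]}.

    For uniform weights it suffices to optimize over permutations, so we
    brute-force all n! matchings (feasible only for very small n).
    """
    if len(xs) != len(ys):
        raise ValueError("both samples must have the same length")
    n = len(xs)
    return min(sum(d(x, y) for x, y in zip(xs, perm)) / n for perm in permutations(ys))


def dist(a, b):
    """The usual metric on the real line."""
    return abs(a - b)


# delta_x vs (1/2) delta_y + (1/2) delta_z, writing delta_x as the sample (x, x).
print(kantorovich_uniform([0, 0], [1, 9], dist))   # 5.0, i.e. (1/2)*1 + (1/2)*9
# (1/2) delta_x + (1/2) delta_w vs (1/2) delta_y + (1/2) delta_z: the better matching wins.
print(kantorovich_uniform([0, 10], [1, 9], dist))  # 1.0
```

Writing $\delta_x$ as the two-point sample $(x, x)$ is legitimate because $\tfrac12\delta_x + \tfrac12\delta_x = \delta_x$.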

On a short map $f : X \to Y$, the functor gives the pushforward of measures $f_* : PX \to PY$ between the spaces of probability measures over $X$ and $Y$. The fact that $f$ is short ensures that the pushforward measures have finite first moment too, and it is easy to check that the map $f_*$ is short as well.

Colimit characterization

The Kantorovich functor can be expressed as a colimit over spaces of finite sequences. Let’s see how.

Let $X$ be a complete metric space. Among the measures of $PX$ there are some which are particularly simple: let’s call simple measures the ones of the form

$$\frac{1}{n} \sum_{i=1}^{n} \delta_{x_i}$$

for points $x_1, \dots, x_n \in X$. These measures can be thought of as the empirical distributions coming from finite sequences $(x_1, \dots, x_n)$. It is well known that simple measures are dense in $PX$. In other words, with the Kantorovich metric, we can approximate any probability measure by a sequence of simple measures.
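Here is a toy numerical illustration of density; it is our own example, not one from the paper. On the real line, take the uniform measure on $[0, 1]$ and approximate it by the simple measure sitting at the midpoints of $n$ equal cells. A short computation gives distance $1/(4n)$, which tends to $0$. The sketch checks this numerically. It relies on the standard fact that on $\mathbb{R}$ the Kantorovich distance is the $L^1$ distance between cumulative distribution functions.

```python
from bisect import bisect_right


def w1_to_uniform(n, grid=100_000):
    """Approximate the Kantorovich distance between the uniform measure on [0, 1]
    and the simple measure (1/n) sum_i delta_{(2i - 1) / (2n)}.

    On the real line this distance is the integral of |F - G| for the two CDFs;
    both CDFs agree outside [0, 1], so a midpoint rule on [0, 1] suffices.
    """
    points = [(2 * i - 1) / (2 * n) for i in range(1, n + 1)]  # already sorted
    total = 0.0
    for j in range(grid):
        t = (j + 0.5) / grid
        uniform_cdf = t
        simple_cdf = bisect_right(points, t) / n  # fraction of points <= t
        total += abs(uniform_cdf - simple_cdf)
    return total / grid


for n in (1, 2, 4, 8):
    print(n, w1_to_uniform(n))  # close to 1 / (4n)
```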

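Thanks to the choice of our category, we can express this density property in the language of category theory as a colimit. In particular, for each $n \ge 1$, let $X^n$ denote the $n$-th cartesian power of $X$, equipped with the rescaled metric:

$$d\big((x_1, \dots, x_n), (y_1, \dots, y_n)\big) = \frac{1}{n} \sum_{i=1}^{n} d(x_i, y_i).$$

Let us now take a quotient space of $X^n$ with respect to permutations, effectively defining the space of $n$-tuples of elements of $X$ up to reordering. Rigorously, we define the space $X_{S_n}$ to be the quotient space of $X^n$ under the action of the symmetric group $S_n$, with the quotient metric:

$$d\big([x_1, \dots, x_n], [y_1, \dots, y_n]\big) = \min_{\sigma \in S_n} \frac{1}{n} \sum_{i=1}^{n} d\big(x_i, y_{\sigma(i)}\big).$$

The spaces $X^n$ and $X_{S_n}$ are again complete metric spaces, objects of $\mathsf{CMet}$. Now the density result, intuitively, says that $PX$ is in some sense the colimit over $n$ of the spaces $X_{S_n}$. To make this precise, we have to give the indexing category over which to take the colimit. So let $N$ be the category whose objects are nonzero natural numbers, with a morphism $n \to m$ if and only if $n$ divides $m$. We can construct a functor mapping the number $n$ to the space $X_{S_n}$, and the morphism $n \to nk$ (for natural numbers $n, k$) to the function $X_{S_n} \to X_{S_{nk}}$ which copies the sequence $[x_1, \dots, x_n]$ $k$ times:

$$[x_1, \dots, x_n] \;\mapsto\; [\underbrace{x_1, \dots, x_n,\ x_1, \dots, x_n,\ \dots,\ x_1, \dots, x_n}_{k \text{ times}}].$$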
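Two facts make this diagram well behaved, and both are easy to test on examples. First, copying a sequence $k$ times does not change its empirical distribution. Second, it does not change distances either: it is an isometric embedding for the rescaled quotient metrics. The Python sketch below checks both on small samples. The helper names are our own, and the sketch uses an integer-valued metric so that all arithmetic stays exact.

```python
from collections import Counter
from fractions import Fraction
from itertools import permutations


def copy_k(xs, k):
    """On representatives, the map X_{S_n} -> X_{S_{nk}}: repeat the sequence k times."""
    return tuple(xs) * k


def empirical(xs):
    """Empirical distribution (1/n) sum_i delta_{x_i}, with exact weights."""
    n = len(xs)
    return {x: Fraction(c, n) for x, c in Counter(xs).items()}


def quotient_metric(xs, ys, d):
    """Metric of X_{S_n}: rescaled distance on X^n, minimized over reorderings of ys."""
    n = len(xs)
    return min(Fraction(sum(d(x, y) for x, y in zip(xs, p)), n) for p in permutations(ys))


def dist(a, b):
    """Integer-valued metric on the integers (keeps the arithmetic exact)."""
    return abs(a - b)


xs, ys = (0, 10), (1, 9)
print(quotient_metric(xs, ys, dist), quotient_metric(copy_k(xs, 3), copy_k(ys, 3), dist))  # 1 1
```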

The construction is also functorial in $X$: given a short map $f : X \to Y$ we get a map $f_{S_n} : X_{S_n} \to Y_{S_n}$ by just applying $f$ elementwise. The fact that simple measures are dense in $PX$ now reads as follows.

Theorem. The space of probability measures $PX$ can be expressed as the following colimit:

$$PX \cong \operatorname{colim}_{n \in N} X_{S_n}.$$

A similar statement can also be given using the spaces $X^n$, without quotienting over permutations (see our paper for more details on this).
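Functoriality in $X$ is compatible with taking empirical distributions. Applying a short map $f$ elementwise to a sequence and then taking the empirical distribution gives the same measure as pushing the empirical distribution forward along $f$. Here is a small Python check; the helper names are our own, and finitely supported measures are encoded as dictionaries.

```python
from collections import Counter, defaultdict
from fractions import Fraction


def empirical(xs):
    """Empirical distribution (1/n) sum_i delta_{x_i}, with exact weights."""
    n = len(xs)
    return {x: Fraction(c, n) for x, c in Counter(xs).items()}


def pushforward(f, measure):
    """f_*: move the mass at each point x to f(x), adding masses that land together."""
    result = defaultdict(Fraction)
    for x, p in measure.items():
        result[f(x)] += p
    return dict(result)


def apply_elementwise(f, xs):
    """On representatives, the map X_{S_n} -> Y_{S_n} induced by f."""
    return tuple(f(x) for x in xs)


xs = (-2, 2, 3, -3, 1)
f = abs  # the absolute value is a short map from the real line to itself
print(empirical(apply_elementwise(f, xs)) == pushforward(f, empirical(xs)))  # True
```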

Monad structure arising from the colimit

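We have seen that we can determine the functor $P$ in terms of the spaces of finite sequences $X_{S_n}$. The monad structure of $P$ can also be defined purely in terms of finite sequences, in a way which is quite close to how one uses integration in practice (at least, in the interpretation given above). Let’s see how. First of all, let $n$ and $k$ be natural numbers, and consider the elements of $(X^n)^k$, which are sequences of sequences, of the form

$$\big((x_{1,1}, \dots, x_{1,n}),\ \dots,\ (x_{k,1}, \dots, x_{k,n})\big).$$

There is a map $(X^n)^k \to X^{nk}$ which we can think of as “removing the inner brackets”, or “flattening the array”:

$$\big((x_{1,1}, \dots, x_{1,n}),\ \dots,\ (x_{k,1}, \dots, x_{k,n})\big) \;\mapsto\; (x_{1,1}, \dots, x_{1,n},\ \dots,\ x_{k,1}, \dots, x_{k,n}).$$

Reordering the inner or the outer sequences only reorders the flattened sequence, so this map also induces a map $(X_{S_n})_{S_k} \to X_{S_{nk}}$. This map is already the “core” of the “averaging” map which gives the multiplication of the monad: the empirical distribution of the sequence

$$(x_{1,1}, \dots, x_{1,n},\ \dots,\ x_{k,1}, \dots, x_{k,n})$$

is exactly the average of the empirical distributions of the sequences $(x_{j,1}, \dots, x_{j,n})$ for $j = 1, \dots, k$:

$$\frac{1}{nk} \sum_{j=1}^{k} \sum_{i=1}^{n} \delta_{x_{j,i}} = \frac{1}{k} \sum_{j=1}^{k} \Big( \frac{1}{n} \sum_{i=1}^{n} \delta_{x_{j,i}} \Big).$$

We can make the statement concrete by appealing to the universal property of $P$ as a colimit. It turns out that there is a unique map $E : PPX \to PX$ extending by continuity the maps $(X_{S_n})_{S_k} \to X_{S_{nk}}$. This map is precisely integration, and in this way it is defined without mentioning measure theory at all.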
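The identity between the empirical distribution of the flattened sequence and the average of the row-wise empirical distributions is easy to check mechanically. The Python sketch below (helper names are ours) flattens a $k \times n$ array of sample points and compares the two measures. On simple measures over simple measures, this averaging is exactly what $E$ does.

```python
from collections import Counter, defaultdict
from fractions import Fraction
from itertools import chain


def flatten(rows):
    """(X^n)^k -> X^{nk}: remove the inner brackets."""
    return tuple(chain.from_iterable(rows))


def empirical(xs):
    """Empirical distribution (1/n) sum_i delta_{x_i}, with exact weights."""
    n = len(xs)
    return {x: Fraction(c, n) for x, c in Counter(xs).items()}


def average(measures):
    """Uniform average (1/k) sum_j p_j of k finitely supported measures."""
    k = len(measures)
    result = defaultdict(Fraction)
    for measure in measures:
        for x, p in measure.items():
            result[x] += p / k
    return dict(result)


rows = (("a", "b", "b"), ("a", "c", "c"))  # k = 2 sequences of length n = 3
lhs = empirical(flatten(rows))
rhs = average([empirical(row) for row in rows])
print(lhs == rhs)  # True
```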

The fact that the resulting $E$ satisfies the monad axioms comes from the fact that the flattening maps satisfy similar axioms, which can be interpreted in terms of adding and removing brackets.

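For example, take the analogue of the associativity diagram for the flattening maps. Suppose we are given $n, k, m$, and consider a triple sequence, i.e. an element of $((X^n)^k)^m$. For $n = k = m = 2$ it has the form

$$\big(((x_1, x_2), (x_3, x_4)),\ ((x_5, x_6), (x_7, x_8))\big).$$

We can first remove the innermost brackets to obtain

$$\big((x_1, x_2, x_3, x_4),\ (x_5, x_6, x_7, x_8)\big),$$

and then remove the remaining inner brackets to obtain

$$(x_1, x_2, x_3, x_4, x_5, x_6, x_7, x_8).$$

Or we can first remove the middle-level brackets to obtain

$$\big((x_1, x_2), (x_3, x_4), (x_5, x_6), (x_7, x_8)\big),$$

and then remove the remaining inner brackets to obtain

$$(x_1, x_2, x_3, x_4, x_5, x_6, x_7, x_8).$$

In the two cases the result is the same. This, after taking the colimit, implies that the associativity diagram for $E$ commutes.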
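The bracket bookkeeping can be mirrored directly in Python, with tuples standing in for sequences; this encoding is our own. Checking one example is of course not a proof, but it shows the two routes around the associativity square at the level of finite sequences.

```python
from itertools import chain


def flatten(seq_of_seqs):
    """Remove one level of brackets: ((...), (...)) -> (..., ...)."""
    return tuple(chain.from_iterable(seq_of_seqs))


def flatten_inside(triple):
    """Remove the innermost brackets inside each outer component."""
    return tuple(flatten(middle) for middle in triple)


triple = (((1, 2), (3, 4)), ((5, 6), (7, 8)))  # n = k = m = 2

innermost_first = flatten(flatten_inside(triple))
middle_first = flatten(flatten(triple))
print(innermost_first)                  # (1, 2, 3, 4, 5, 6, 7, 8)
print(innermost_first == middle_first)  # True
```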

In particular, the functors $X \mapsto X_{S_n}$ (and also $X \mapsto X^n$) are said to form a graded monad. For the theory of graded monads see the paper

- Fujii, Katsumata and Melliès, Towards a formal theory of graded monads. In: Jacobs B., Löding C. (eds) Foundations of Software Science and Computation Structures. FoSSaCS 2016. Lecture Notes in Computer Science, vol 9634. Springer, Berlin, Heidelberg. (pdf)

In general, the statement is that under some mild conditions, the colimit of a graded monad is a (traditional) monad.

Theorem. The functor $P$ can be equipped with a unique monad structure induced by the flattening maps $(X_{S_n})_{S_k} \to X_{S_{nk}}$ and the universal property of $P$ as a colimit.

Algebras

We have said above that algebras of a probability monad tend to look like convex spaces. This is the case for the Kantorovich monad too. In particular, algebras have to be objects of our category, that is, complete metric spaces. Ideal candidates are then closed convex subsets of Banach spaces, since they are complete metric spaces, they are convex, and the convex and metric structures interact nicely with each other. This turns out to be indeed the case:

Theorem. The algebras of the Kantorovich monad are exactly the closed convex subsets of Banach spaces, with the induced metric and convex structure.

The proof of this statement relies on the colimit characterization above, and on previous work of Tobias with Valerio Capraro, which in turn is based on Stone’s celebrated theorem for convex structures. More information about this can also be found in David Corfield’s post here on the Café about convex spaces. Therefore, the Kantorovich monad gives a framework for treating random variables with values in Banach spaces categorically.

Further questions and reading

Some questions arise from the construction of this monad.

- Is there a way of obtaining the law of large numbers, or a related result, as a category-theoretical statement?

- Are there generalizations to Lawvere metric spaces?

A more algebraic question is the following.

- Which other monads arise as colimits of graded monads, how general is the construction?

If you want to know more, there are the papers of Tobias and myself on the topic (here, here, and here) and my PhD thesis (pdf), and more work is still in progress. There is also Tobias’ recent talk at MIT. Tobias and I are also working on a project at the Applied Category Theory Adjoint School this year, together with Nathan Bedell, Carmen Constantin, Martin Lundfall, and Brandon Shapiro. The project partly involves the Kantorovich monad, and there will be posts about it on this blog in the coming weeks. So, stay tuned!

Re: The Kantorovich Monad

I’m writing in a hurry, and haven’t thought/read properly, but is there any relation between this monad and the adjunction between the category of (bounded?) metric spaces and the category of Banach spaces, for which the “free” Banach space on a given metric space is sometimes called the Arens–Eells space? (The dual of the Arens–Eells space of X is the space of Lipschitz functions on X.)