The Uniform Measure

Posted by Tom Leinster

Category theory has an excellent track record of formalizing intuitive statements of the form “this is the canonical such and such”. It has been especially effective in topology and algebra.

But what does it have to say about canonical measures? On many spaces, there is a choice of probability measure that seems canonical, or at least obvious: the first one that most people think of. For instance:

On a finite space, the obvious probability measure is the uniform one.

On a compact metric space whose isometry group acts transitively, the obvious probability measure is Haar measure.

On a subset of , the obvious probability measure is normalized Lebesgue measure (at least, assuming the subset has finite nonzero volume).

Emily Roff and I found a general recipe for assigning a canonical probability measure to a space, capturing all three examples above: arXiv:1908.11184. We call it the uniform measure. It’s categorically inspired rather than genuinely categorical, but I think it’s a nice story, and I’ll tell it now.

Tony goes swimming

Let’s warm up with a hypothetical scenario. Tony goes swimming once a week, on a variable day. What probability distribution on should we use to model his swimming habits?

If we have no information whatsoever, it’s got to be the uniform distribution , purely by symmetry.

But now we might bring to bear our knowledge that swimming is a leisure activity, which makes it more likely to happen at weekends. Or perhaps Tony slips us a definite clue: he goes at weekends exactly as often as he goes on weekdays. Of course there are many distributions that satisfy this constraint, but again, symmetry compels us to choose

as the canonical answer.

Symmetry only gets us so far, though. What if Tony also tells us that the probability he goes on Friday is equal to the sum of Wednesday’s and Thursday’s probabilities? Any distribution

with satisfies the known constraints and the obvious symmetry requirements, and it’s not clear which of them should be regarded as “canonical”.

What are we doing?

What’s happening here is that we’re looking for the most uniform distribution possible, but except in simple cases, we can’t say what that means without some way of quantifying uniformity. So, let’s think about that. How uniform is a given probability measure on a space?

You may have guessed that this has something to do with entropy, and you’d be right. But I want to explain all this from the ground up, motivating everything from first principles, without invoking the E word. Also, if you guessed that what we’re going to end up with is a maximum entropy distribution, you’d only be half right. There are actually two key ideas here, and maximizing entropy is only one of them.

How spread out is a distribution?

The first key idea is to look for the most spread-out distribution possible on a space. I won’t say just yet what kind of “space” Emily and I worked with, but they include metric spaces, so you can keep that family of examples in mind.

Let’s consider this subset of :

It’s drawn here with an even shading, which corresponds to the uniform distribution — I mean, Lebesgue measure, normalized to give the space a total measure of . But of course there are other probability measures on it, like this one with a single area of high concentration (shaded darker) —

— or this one, with two areas of high concentration —

Which one is the most spread out?

Of course, it depends what “spread out” means. It’s pretty clearly not the second one, where most of the mass is concentrated in the centre. But arguably the third is more spread out than the first, uniform, distribution: relative to the uniform distribution, some of the mass has been pushed out to the sides.

Or take this simpler example. Consider all probability measures on a line segment of length , and let’s temporarily define the “spread” of a distribution on the line as the expected distance between a pair of points chosen randomly according to that distribution. An easy little calculation shows that with the uniform distribution, the average distance between points is . But we can do better: if we put half the mass at one endpoint and half at the other, then the average distance between points is . So the uniform distribution isn’t always the most spread out! (I don’t know which distribution is.)

This measure of spread isn’t actually the one we’ll use. What we’ll work with is not the distance between points, but the similarity between them.

Measuring spread

Formally, take a compact Hausdorff space and a “similarity kernel” on it, which means a continuous function such that for every point . You can obtain such a space from a compact metric space by putting . That’s the most important family of examples.

Suppose we also have a probability measure on . (Formally, “measure” means “Radon measure”.) We can quantify how ordinary or typical a point is with respect to the measure — in other words, how dark you’d colour it in pictures like the ones above. The typicality of is

It’s the expected similarity between and a random point. The higher it is, the more concentrated the measure is near .

The mean typicality of a point in is

This is high if the measure is highly concentrated. For instance, if we’re dealing with a metric space then always lies between and , so the maximum possible value this can have is , which is attained if and only if is concentrated at a single point — a Dirac delta. So, quantifies the lack of spread. Hence

quantifies the spread of the measure across the space .

But this is just one way of quantifying spread! More generally, instead of taking the arithmetic mean of the ordinariness (which is what is), we can take any power mean. Then we end up with

as our measure of spread, for any real .

For reasons I won’t go into, it’s convenient to reparametrize with and it’s sensible to restrict to . Simplifying, our formula becomes

(). And although this formula doesn’t make sense when , taking limits as gives the righteous definition there:

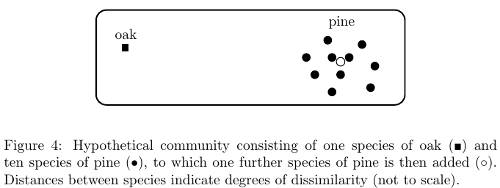

If this sounds familiar, it might be because Christina Cobbold and I used as measures of biological diversity. Here is to be thought of as a finite set of species, indicates the degree of similarity between species (genetic, for instance), and is the relative abundance distribution of the species in some ecological community. High values of indicate a highly diverse community. The parameter controls the relative emphasis placed on typical or atypical species: e.g. gives atypical species as much importance as typical ones, while depends only on the most typical species of all.

In any case, Emily and I call the diversity of of order . Its logarithm,

is the entropy of of order . In the special case where is finite and

the entropy is the Rényi entropy of order , and in the even more special case where also , it’s the Shannon entropy.

For today it doesn’t matter whether we use the diversities or the entropies , since all we’re interested in is maximizing them, and logarithm is an increasing function. So “diversity” and “entropy” mean essentially the same thing, and in this geometric context, they’re our formalization of the idea of “spread-outness”.

What’s the most spread-out distribution of them all?

Fix a space with a similarity kernel , as above. You won’t lose much if you assume it’s a metric space with if you like, but in any case, I’ll assume now that is symmetric. (The theorem I’m about to state needs this.)

Two questions:

Which probability measure on maximizes the diversity ?

What is the value of the maximum diversity, ?

We’ve already observed that if we want to maximize diversity (“spread-outness”), the uniform distribution might not be best. We saw that for the line segment and the potato shapes. Another simple example is a three-point space consisting of two points very close together and the third far away. You wouldn’t want to use the uniform distribution, as that would put of the weight at one end and at the other. Something closer to would be more spread out.

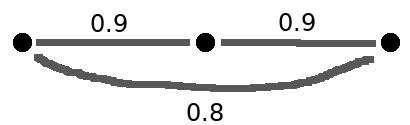

So the answers to these questions aren’t going to be simple. But also, there’s an elephant in the room: both answers surely depend on ! After all, changing changes , and different values of sometimes have conflicting ideas about when one probability measure is more spread out than another. It can happen, for instance, that

for probability measures and on .

However, Emily and I prove that it doesn’t actually matter! The answers to both questions are miraculously independent of . That is:

There is some probability measure that maximizes for all simultaneously.

The maximum diversity is the same for all .

If this sounds familiar, it might be because Mark Meckes and I proved it in the case of a finite space . Extending it to compact spaces turned out to be much harder than anticipated. For instance, part of the proof is to show that is continuous in , which in the finite case is pretty much a triviality, but in the compact case involves a partition of unity argument and takes up several pages of Emily’s and my paper.

What matters here is the first bullet point: there’s a best of all possible worlds, a probability measure on our space that unambiguously maximizes diversity (or entropy, or spread). Sometimes there’s more than one such measure. But in many examples, including many of the most interesting ones, there’s only one, so here I’ll casually refer to it as the most spread out measure on .

Back to the line

The simplest nontrivial example is a line segment. What’s its most spread out measure?

Crucially, the answer depends on how long the line is. It’s a linear combination of 1-dimensional Lebesgue measure and a Dirac delta at each end, but the coefficients change with the length. I could write down the formula, which is simple enough, but that would distract from the main point:

As the length increases to , the most spread out measure converges to normalized Lebesgue.

In other words, the Dirac measures at the end fade to nothing as we scale up.

The formal statement is this: if we write

then for each real , the space with similarity kernel has a unique most spread out measure , and in the weak topology on the space of probability measures, converges to the normalized Lebesgue measure on as .

Another term for “normalized Lebesgue measure” on the line is “uniform measure”. So in this example at least:

The uniform measure is the large-scale limit of the most spread out measure.

We’re going to take the lesson of this example and turn it into a general definition.

Defining the uniform measure

Here goes. Let be a compact metric space. Suppose that for , its rescaling has a unique most spread out measure , and that has a limit in the weak topology as . Then the uniform measure on is that limit:

Conceptually, the difference between the “most spread out” measures and the uniform measure is that depends on the scale factor (as in the example of the line segment), but doesn’t. The uniform measure is independent of scale: for all . That’s one of the properties that makes the uniform measure canonical.

In summary, the first key idea behind the definition of uniform measure is to take the most spread out (maximum entropy) distribution, and the second key idea is to then pass to the large-scale limit.

Recapturing the three examples

Back at the start of the post, I claimed that our notion of uniform measure captured three intuitive examples of the “canonical measure” on a space. Let’s check back in on them.

Finite spaces. For a finite metric space, the most spread out measure is not usually uniform, as we’ve seen. But as we scale up, it always converges to what’s usually called the uniform measure. In other words, what Emily and I call the uniform measure is, in this case, what everyone else calls the uniform measure.

One way to think about this is as follows. In general, to get the uniform measure on a space , we take the most spread out measure on for each , then pass to the limit as to get . But for a finite space, we can do these two processes in the opposite order: first take the limit as of , giving us a copy of where all distances between distinct points are , and then take the most spread out measure on that space, which trivially is the one that gives equal measure to each point. This is just a story I tell myself: I know of no conceptual reason why interchanging the order of the processes should give the same result, and in any case the story only makes sense for finite , since otherwise we escape the world of compact spaces. But perhaps it’s a helpful story.

Homogeneous space. Now take a compact metric space whose isometry group acts transitively on points. A version of the Haar measure theorem states that there’s a unique isometry-invariant probability measure on . And it can be shown that the most spread out measure on is just . Taking the limit as , the uniform measure on is, therefore, also .

There’s a caveat here: the proof assumes that the metric space is of negative type, a classical condition that I don’t want to go into now. Many spaces are of negative type, including all subspaces of . But it would be nice to now whether the result also holds for spaces that aren’t of negative type.

(And to be more careful than I really want to be in a blog post, it’s assumed here and in many other places that the space concerned is nonempty.)

Subsets of . In the case of a line segment, we saw that the uniform measure is the uniform measure in the usual sense (normalized Lebesgue). What about subsets of in general?

Let’s consider just those compact subsets of that have nonzero measure. Then we can restrict Lebesgue measure to and normalize it to give a total measure of . Is this canonical probability measure on the same as the uniform measure that Emily and I define?

It is, and we prove it in our paper. A crucial role is played by a result of Mark Meckes: every compact subset of has a unique most spread out measure. (The proof is Fourier-analytic.) But the point I want to emphasize is that unlike in the previous two examples, we have no idea how to describe for finite scale factors !

However, despite not knowing for finite , we can describe the limit of as — in other words, the uniform measure on . And as promised, it’s precisely normalized Lebesgue.

Re: The Uniform Measure

Apologies to Simon for using the word “spread” so much, when he’s already defined it to mean something different (although related). I couldn’t think of a decent synonym.